adriana sá

AUDIO-VISUAL RESEARCH

AG#2

Screen capture: AG#2 with 3D world #1 (please view full-screen)

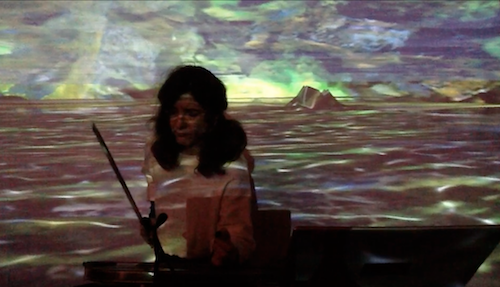

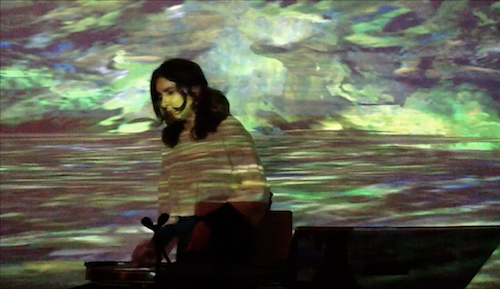

Playing (please view full-screen)

Screen capture: AG#2 with 3D world #2 (please view full-screen)

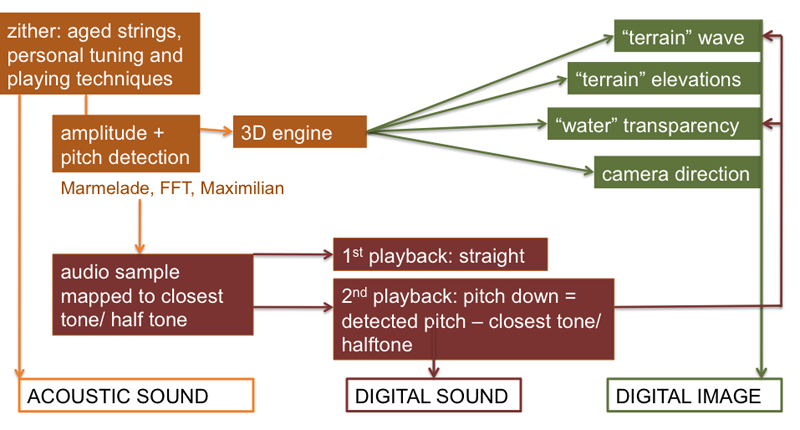

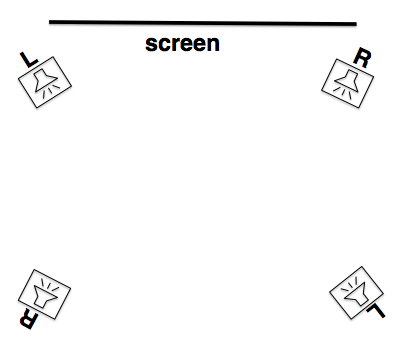

Technical diagram > how the audio-visual instrument works:

Creative strategies for sonic complexity:

The audio-visual instrument has two interfaces for digital audio: the analysis of the zither input and a few switches assigned to audio sample banks. Whilst the switches provide control in defining musical sections, the digital analysis creates unpredictability.

The pitch detected from the zither is mapped to the closest tone or half tone. That tone or half tone is not itself played back; it is further mapped to a pre-recorded sound, which plays back twice. A pitch-down value is applied during the second playback. This value equals the difference between the detected pitch and the closest tone or half tone. As the zither playing engages with these digital mappings and constraints, the music shifts in between tonal centres.
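The pitch mapping described above can be illustrated with a minimal sketch. It is not the instrument's actual code: the 440 Hz reference, the use of cents as the unit of pitch difference, the choice of sample by pitch class, and the function names are all assumptions made for illustration.

```python
import math

A4_HZ = 440.0  # assumed reference tuning (not stated in the text)


def nearest_half_tone(freq_hz):
    """Return (midi_note, deviation_cents) for a detected frequency.

    deviation_cents is the signed distance from the closest half tone.
    """
    midi_float = 69.0 + 12.0 * math.log2(freq_hz / A4_HZ)
    midi_note = round(midi_float)
    deviation_cents = (midi_float - midi_note) * 100.0
    return midi_note, deviation_cents


def playback_plan(freq_hz):
    """Describe the two playbacks triggered by one detected pitch."""
    midi_note, deviation_cents = nearest_half_tone(freq_hz)
    sample_index = midi_note % 12  # assumption: one sample per pitch class
    pitch_down_cents = abs(deviation_cents)
    # Playback rate that lowers the sample by pitch_down_cents.
    rate = 2.0 ** (-pitch_down_cents / 1200.0)
    return [
        {"sample": sample_index, "rate": 1.0},   # first playback, unaltered
        {"sample": sample_index, "rate": rate},  # second playback, pitched down
    ]
```

In this sketch a perfectly tuned string yields two identical playbacks, whereas any deviation from the tempered grid produces a slightly lower second playback, which is one way the shifting between tonal centres could arise.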

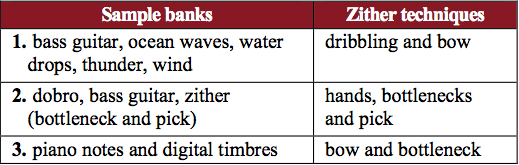

AG#2 includes three audio sample banks, each containing twelve pre-recorded sounds. I developed specific zither playing techniques in combination with each sample bank (table on the right).


Creative strategies for visual continuity:

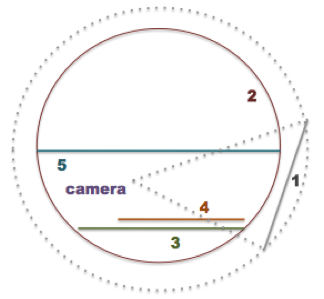

The projected image is a shifting 3D camera view over a painting-like underwater landscape, which morphs with sound. Visual changes happen at the level of detail; the projected image creates a stage scene that reacts to the sound without distracting attention from the music. My taxonomy of continuities and discontinuities shows that, to keep the music in the foreground, one must dispense with disruptive visual changes, i.e. radical discontinuities, which automatically attract attention. One should apply Gestaltist principles to visual dynamics: the image must enable perceptual simplification in order to provide a sense of overall continuity. There can be progressive continuities and ambivalent discontinuities. With progressive continuities, successive events display a similar interval of motion (Gestalt of good continuation). Ambivalent discontinuities are simultaneously continuous and discontinuous. At low resolution, the foreseeable logic is shifted without disruption. At high resolution, discontinuities become more intense.

Creative strategies for a fungible audio-visual relationship:

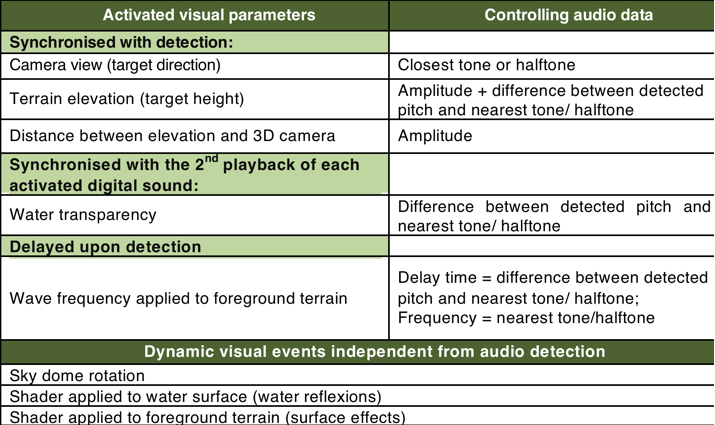

The audio-visual mapping is fungible: cause and effect relationships range from transparent to opaque, producing a sense of causation and simultaneously confounding the cause and effect relationships. The table below shows that certain visual changes are synchronised with sound, other changes occur with variable delay upon detection, and there are also visual changes that do not depend on sound. The 3D camera direction shifts and the terrain elevations are synchronised with audio detection. A digital sound is emitted, a terrain elevation emerges, and the camera view shifts toward a new target direction. The water transparency parameter mapped to that same detection is synchronised with the second playback of the sample, the one that is pitched down according to the difference between the detected pitch and the closest tone or half tone. The foreground terrain undulates according to variable frequencies, which change with variable delay upon detection. The sky dome and the water surface move consistently, at different paces, but independently from audio detection.
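The three kinds of timing described above (synchronised, variably delayed, independent) can be sketched as a small event scheduler. This is only an illustration under stated assumptions: the function name, the `sample_duration` and `max_delay` parameters, the uniform random delay, and the idea that the second playback starts when the first ends are all hypothetical and not taken from the instrument.

```python
import random


def schedule_detection(t_detect, sample_duration, rng, max_delay=2.0):
    """Return time-ordered (time, action) pairs triggered by one detection.

    The sky dome and the water surface are deliberately absent: they move
    independently from audio detection, so nothing is scheduled for them.
    """
    # Assumption: the second playback starts when the first one ends.
    t_second = t_detect + sample_duration
    # Assumption: the variable delay is drawn uniformly from [0, max_delay].
    t_delayed = t_detect + rng.uniform(0.0, max_delay)
    events = [
        (t_detect, "first playback of sample"),
        (t_detect, "terrain elevation emerges"),
        (t_detect, "camera shifts toward new target direction"),
        (t_second, "second playback of sample (pitched down)"),
        (t_second, "water transparency changes"),
        (t_delayed, "foreground undulation frequency changes"),
    ]
    return sorted(events, key=lambda event: event[0])
```

Calling `schedule_detection(10.0, 1.5, random.Random(0))` would yield three events at the moment of detection, two at the second playback, and one at an unpredictable point in between or after, which mirrors the mix of transparent and opaque relationships.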

AG#2 implements 3D sound spatialisation features designed from scratch, considering the principles of sonic complexity and audio-visual fungibility. The elevations from the foreground terrain (see above) are sound emitters, which means that the digital sound output is routed dynamically to the speakers; the routing depends on the 3D camera position relative to the position of the elevation in the digital 3D world. I use an inverted, double stereo system (see below), rather than a multi-channel sound diffusion system. The speed at which the sounds move through the system corresponds to the speed of the 3D camera. This produces a sense of causation. Yet, for example, a visible sound emitter on the left of the screen is simultaneously emitted through the front-left speaker and the rear-right speaker. That confounds the cause and effect relationships.
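The inverted, double stereo routing can be sketched as follows. The text only states that an emitter on the left of the screen sounds through the front-left and rear-right speakers; the equal-power panning law, the `pan` parameter in the range -1 (left) to 1 (right), and the function name are assumptions introduced for illustration.

```python
import math


def inverted_double_stereo_gains(pan):
    """Return speaker gains for an emitter at horizontal position `pan`.

    pan = -1.0 is fully left of the camera view, 1.0 is fully right.
    The rear pair mirrors the front pair, so left and right are swapped.
    """
    pan = max(-1.0, min(1.0, pan))
    # Assumed equal-power panning law for the front stereo pair.
    theta = (pan + 1.0) * math.pi / 4.0
    left, right = math.cos(theta), math.sin(theta)
    return {
        "front_left": left,
        "front_right": right,
        "rear_left": right,   # inverted: rear left carries the right signal
        "rear_right": left,   # inverted: rear right carries the left signal
    }
```

In this sketch `pan` would be recomputed as the camera moves, so a sound would travel through the speakers at the speed of the camera while its rear image travels in the opposite direction.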

AG#2 has been developed along with a performance series called Included Middle. I performed with this version of the audio-visual instrument in Portugal, the U.K. and the Netherlands, solo and in collaboration with other musicians. Solo performances were in London, at Amersham Arms and at St. James Church / Goldsmiths College; in Viseu, at Invisible Places / Jardins Efémeros; and in Amsterdam, at STEIM. A collaborative performance was at Teatro Maria Matos, in Lisbon, with John Klima and Tó Trips. Two more were at Carpe Diem, also in Lisbon: a duo with Helena Espvall, and a trio with Nuno Torres and Nuno Morão.
